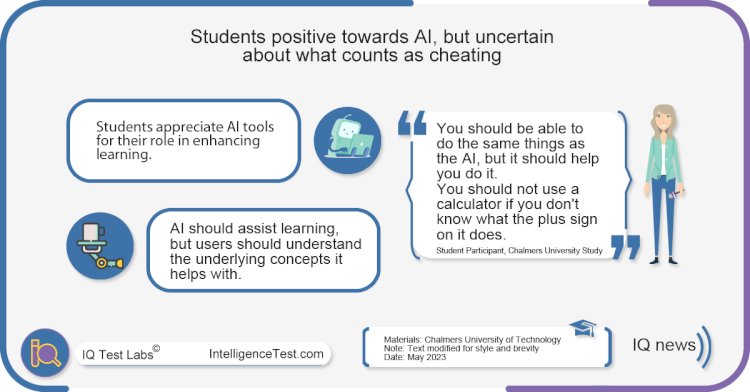

Students positive towards AI, but uncertain about what counts as cheating

Swedish students' attitudes towards AI in education - positive yet cautious.

Summary

Students in Sweden are positively inclined towards AI tools such as ChatGPT in education but are uncertain about their use in exams, according to a survey by Chalmers University of Technology.

Introduction

The role of AI in education sparks diverse and emotionally charged views among students, as revealed by a first-of-its-kind survey conducted by Chalmers University. This study focused on how Swedish students perceive the use of AI tools such as ChatGPT in their learning environments.

Main points

- A majority of students believe that AI tools, specifically ChatGPT, enhance their efficiency and improve their academic writing and language skills.

- Students feel uncertain and anxious about where the boundary lies between using AI as a learning tool and using it to cheat, particularly in exams.

- Over half the students do not know whether their educational institution has guidelines for responsible AI use. Even so, a significant majority oppose a ban on AI in education.

- AI is seen as a valuable aid for students with disabilities, helping them overcome certain learning challenges.

Conclusion

The survey provides insights into student perspectives on AI's role in education, underlining both the potential benefits and the need for clear guidelines on its use. While students welcome AI's supportive role in learning, clear boundaries for its application are essential to ensure ethical use and to address concerns about cheating.

Researcher quotations

We've shown that people can train themselves to recognize machine-generated texts. People start with a certain set of assumptions about what sort of errors a machine would make, but these assumptions aren't necessarily correct. Over time, given enough examples and explicit instruction, we can learn to pick up on the types of errors that machines are currently making.

- Chris Callison-Burch, Associate Professor in the Department of Computer and Information Science (CIS)

AI today is surprisingly good at producing very fluent, very grammatical text, but it does make mistakes. We prove that machines make distinctive types of errors - common-sense errors, relevance errors, reasoning errors and logical errors, for example - that we can learn how to spot.

- Liam Dugan, Ph.D. student in CIS

Materials provided by: Chalmers University of Technology

Note: Text modified for style and brevity

Date: May 2023